🛠️ Model Testing - Test Jobs

What is Model Testing - Test Jobs?

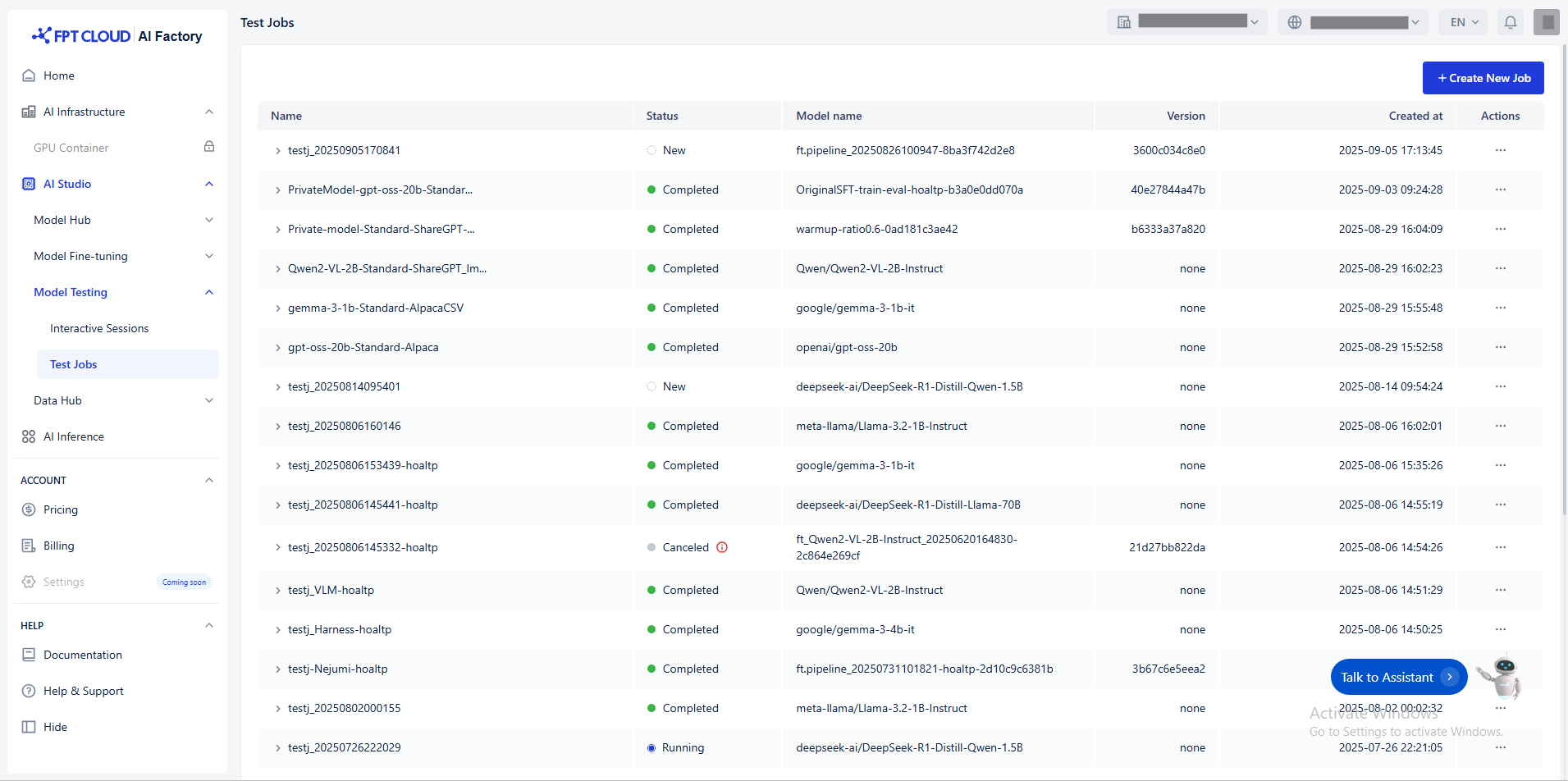

Model Testing - Test Jobs is a core feature of the FPT AI Factory Portal that provides a structured, automated way to evaluate fine-tuned AI models. Unlike Interactive Sessions, which focus on real-time, manual interaction, Test Jobs are designed for large-scale, repeatable testing using predefined datasets.

Key capabilities of Test Jobs:

Automated Evaluation: Run large-scale tests using structured input data to evaluate model responses without manual intervention.

Custom Test Sets: Upload domain-specific datasets tailored to your business cases (e.g., custom queries, legal documents, medical records).

Standardized Test Sets: Leverage publicly available benchmarks developed by researchers to evaluate models against industry standards (e.g., Nejumi Leaderboard 3, LM Evaluation Harness, VLM Evaluation Kit).

Performance Metrics: Analyze model outputs using quantitative and qualitative metrics.
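To make "automated evaluation" and "quantitative metrics" concrete, here is a minimal sketch that is independent of the portal. It scores two model versions against the same small test set using exact-match accuracy, which is just one simple metric. The JSON Lines layout with `prompt` and `expected` fields, the helper names, and the stand-in functions `model_v1` and `model_v2` are all hypothetical illustrations, not the portal's documented upload format or API.

```python
import json

# Hypothetical test set in JSON Lines form: one {"prompt", "expected"} object per line.
# This field layout is an illustrative assumption, not the portal's upload format.
TEST_SET_JSONL = """\
{"prompt": "What is the capital of France?", "expected": "Paris"}
{"prompt": "2 + 2 = ?", "expected": "4"}
{"prompt": "Opposite of 'hot'?", "expected": "cold"}
"""


def load_test_set(text):
    """Parse JSON Lines text into a list of dicts, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def normalize(answer):
    """Lowercase and collapse whitespace so trivial formatting differences don't count as errors."""
    return " ".join(answer.lower().split())


def exact_match_accuracy(model, records):
    """Fraction of records where the model's normalized output equals the normalized expected answer."""
    if not records:
        return 0.0
    hits = sum(normalize(model(r["prompt"])) == normalize(r["expected"]) for r in records)
    return hits / len(records)


# Hypothetical stand-ins for two fine-tuned model versions; a real test job would query the model itself.
def model_v1(prompt):
    return {"What is the capital of France?": "Paris"}.get(prompt, "unknown")


def model_v2(prompt):
    answers = {
        "What is the capital of France?": "paris",
        "2 + 2 = ?": "4",
        "Opposite of 'hot'?": "Cold ",
    }
    return answers.get(prompt, "unknown")


if __name__ == "__main__":
    records = load_test_set(TEST_SET_JSONL)
    for name, model in [("v1", model_v1), ("v2", model_v2)]:
        print(f"{name}: exact-match accuracy = {exact_match_accuracy(model, records):.2f}")
```

Because the same fixed test set and the same metric are applied to both versions, the scores are directly comparable. That repeatability is what distinguishes a test job from ad hoc checks in an interactive session.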

Model Testing - Test Jobs helps ensure that your AI model is not only responsive in live interactions but also robust, consistent, and scalable across a wide range of inputs. It is an essential step before deployment, especially for high-stakes applications in industries such as finance, healthcare, and legal services.

When to Use Model Testing - Test Jobs?

Model Testing - Test Jobs is most valuable when you need to evaluate the overall performance, reliability, and scalability of a fine-tuned model before deployment.

You should use Test Jobs when:

You want to validate model performance at scale.

You want to track improvements across model versions.

You require quantitative performance metrics.
